Today in the United States, more than a third of adults have a college degree, compared to fewer than five percent of adults at the time of World War II, representing a dramatic change in what people do when they reach adulthood.1 This year alone nearly two million people in the United States will earn their bachelor’s degrees.2 Our country’s success in promoting a college education would be something to celebrate, if not for one big, embarrassing blemish: those who are already privileged are the most likely to get to and through college, while the underprivileged are the least likely.

The disparities by race and income are stark. High school students from the top fourth of family income are four times as likely to have earned a bachelor’s degree ten years out of high school as those in the bottom fourth of family income.3 In the adult population at large, African Americans are half as likely, and Latinos one-third as likely, as Asian Americans to have at least a bachelor’s degree.4 And the average financial gain that comes with a college degree—or, more accurately, the financial penalty that comes from not graduating—is as large as it has ever been, making the consequences of the inequality more severe.

This blemish—more like a blight, really—not only threatens America’s self-image as the land of opportunity but also undermines our nation’s civic health. A country in which the wealthy and powerful pass their privilege down to their offspring, leaving everyone else behind, is an aristocracy, not a democracy.

We were warned this might happen. In 1947, a panel commissioned by President Truman cautioned that education might not solve inequality but instead make it worse:

We have proclaimed our faith in education as a means of equalizing the conditions of men. But there is a grave danger that our present policy will make it an instrument for creating the very inequalities it was designed to prevent. If the ladder of educational opportunity rises high at the doors of some youth and scarcely rises at the doors of others, while at the same time formal education is made a prerequisite to occupational and social advance, then education may become the means, not of eliminating race and class distinctions, but of deepening and solidifying them.5

Exactly what the Truman Commission feared has come to pass: a college degree has become the primary route to economic security, while getting the degree is virtually assured for the rich and rare for the poor.

Increasing rates of completion would make a difference: if all of the adults who had started college completed a degree, the gap between African Americans and whites would be nearly 40 percent lower than it is today (a decline from a 12.3 percentage-point gap to 7.6 percentage points).6

There are many reasons students do not make it all the way through to a college degree. The most prominent is simply the price—the time and money it takes to get a college degree can be an insurmountable hurdle for low-income students, even those who receive relatively generous financial aid packages. Students with weaker academic skills face additional barriers when they are placed in courses that make the degree an even more distant goal. And the social and psychological challenges to students’ attempts to fit in on college campuses take their toll as well. In the end, some students, faced with the multiple stresses of this new environment, find it extremely difficult to do the one thing that is most important: engage with their coursework.

This report takes a look at how government officials have pressed college accreditors to focus more on “student outcomes”—quantifiable indicators of knowledge acquired, skills learned, degrees attained, and so on. It then argues that it is not these enumerated outcomes that are the best way to hold colleges accountable, but rather the evidence of student engagement in the curriculum—their papers, written examinations, projects, and presentations—that holds the most promise for spurring improvement in higher education. Furthermore, this engagement is also a key factor in keeping students in school all the way to graduation. The report concludes that reformers seeking to enhance college performance and accountability should focus not on fabricated outcome measures but instead on the actual outputs from students’ academic engagement, the best indicators of whether a college is providing the quality teaching, financial aid, and supportive environment that make higher learning possible, especially for the disadvantaged.

This report is the first of a series from The Century Foundation, sponsored by Pearson. The views and opinions expressed in this paper are those of the authors and do not necessarily reflect the views or position of Pearson. The series grew out of an August 2014 conference at which researchers and several university presidents were exploring new paths to diversity in higher education in light of emerging legal constraints on race-based affirmative action. As participants discussed ideas to ensure access for low-income and minority students, university leaders were equally concerned about how to improve rates of college graduation by disadvantaged students.

The Current Misguided Focus on Outcomes

As part of his State of the Union address in 2013, President Obama recommended that funding to colleges be contingent on “student outcomes.”7 Since then, there has been a bipartisan drumbeat in favor of outcomes from colleges, and standards from the independent accreditors that decide whether a college is good enough to get federal support. U.S. senator and presidential candidate Marco Rubio wants to reward colleges that demonstrate “high student outcomes.”8 The editorial board of The Wall Street Journal has chimed in, too. Complaining that accreditors focus too much on inputs—such as the number of books in the library—rather than on outcomes, they want the federal government to bypass accreditors and adopt “simple, clear standards” to cut off federal funding from bad colleges.9 In a November announcement of its plans to reform accreditation, the U.S. Department of Education used the word outcome or standards thirty-one times (student outcomes, institutional outcomes, outcomes-based reviews, outcomes-driven accountability, outcome measures, outcomes-driven oversight, critical outcomes data, outcomes-directed measures, key outcomes, outcome standards, achievement standards, accreditor standards, recognition standards, and more).10 Recently, the Department of Education announced it will insist that accreditors adopt “strong and meaningful outcome standards.”11

We have heard the outcomes chorus before, ten years ago, when Secretary of Education Margaret Spellings made similar demands, ultimately gaining pledges from the accreditors that they would focus more on outcomes in their reviews of colleges.12 Secretary Spellings was simply tapping into a movement in higher education that was gaining momentum at the time, without knowing that its momentum actually came from rolling downhill.

The Birth of Outcomes, and How They Went Awry

In the 1990s, reformers thought they could improve teaching and learning in college if they insisted that colleges declare their specific “learning goals,” with instructors defining “the knowledge, intellectual skills, competencies, and attitudes that each student is expected to gain.”13 The reformers’ theory was that these faculty-enumerated learning objectives would serve as the hooks that would then be used by administrators to initiate reviews of actual student work, the key to improving teaching. The logic went like this:

- Step 1. Faculty members declare their goals for students, what became known as “student learning outcomes,” or SLOs.

- Step 2. Observers seek evidence of whether students met those goals, what became known as “assessment.”

- Step 3. Faculty improve their instruction based on the assessment.

That was the idea. But it hasn’t worked out that way. Not even close.

In 2001, Peter Ewell, a leader in the student-learning-outcome movement, reported that there had been progress toward the reformers’ goal: most accreditors had included at least some mention of “student learning” in the standards they used to judge colleges. In a paper commissioned by accreditors, he urged them to be “more aggressive and creative in requiring evidence of student learning outcomes as an integral part of their standards and processes for review.”14 In 2006, Secretary Spellings took up the charge, and accreditors pledged to focus more on outcomes, as Ewell had recommended. They went along because it was hard to oppose something that seemed, on the surface, to be so reasonable. What could go wrong?15

Step 1 turned out to be a bad starting place. The supposed outcomes of higher education became embodied in lists of topics that a course covers, with verbs added to index what students will “be able to do.” Universities across the country created their lists of SLOs, and they tend to look pretty similar. Gonzaga University actually has a manual for how to write SLOs, and it includes this example:

General Psychology: Students who complete this course will be able to:

- Identify and define basic terms and concepts which are needed for advanced courses in psychology

- Outline the scientific method as it is used by psychologists

- Apply the principles of psychology to practical problems

- Compare and contrast the multiple determinants of behavior (environmental, biological, and genetic)16

As a list of topics, these are not objectionable. But Ewell’s original concept had been that the SLOs would indicate “the particular levels of knowledge, skills, and abilities that a student has attained at the end (or as a result) of his or her engagement in a particular set of collegiate experiences.” The example from Gonzaga clearly does not. The Gonzaga list could be for a high school course, or an undergraduate course, or even a graduate school course.

In 2014, Ewell and others released a compendium of model SLO-blurbs that claims to provide “a demarcation of increasing levels of challenge as a student progresses” from the two-year degree to the bachelor’s and then master’s degree.17 A demarcation, the authors promise, is something that can actually separate the wheat from the chaff, a “level of proficiency.” The demarcation is all about the verbs, says one of the model’s authors. Adapted from a controversial verb hierarchy known as Bloom’s taxonomy, the verbs are “the center, fulcrum, engine of a learning outcome statement . . . corresponding to cognitive activities in which students engage and faculty seek to elicit.”18

What do these magical demarcating SLO-blurb models look like? Here are examples from each level of Civic and Global Learning: At the associate level, the student “Describes diverse positions, historical and contemporary, on selected democratic values or practices, and presents his or her own position on a specific problem where one or more of these values or practices are involved.” At the bachelor’s degree level, the student “Develops and justifies a position on a public issue and relates this position to alternate views held by the public or within the policy environment.” At the master’s level, the student “Assesses and develops a position on a public policy question with significance in the field of study, taking into account both scholarship and published or electronically posted positions and narratives of relevant interest groups.”19

Contrary to what is advertised—that the model defines “what college graduates should know and be able to do”—the blurbs and the verbs actually tell us next to nothing about skills, or knowledge, or anything.20 Yet the blurbs have become objects of reverence, with systems of assessment and data-tracking built up around them. The SLO effort has become a bureaucracy without benefit, such that even faculty members who agreed with the goal of the effort believe it has gotten out of hand. The statewide faculty senate of California’s community colleges, for example, found that while the SLO effort was well-intended, in practice it has led to “contention, frustration, and divisiveness at many colleges.”21

A common product used by campuses across the country to track their SLOs is a database management system called TracDat. Faculty members can plug each SLO-blurb into the database, identify the assessment that is connected with the blurb, and then self-report the proportion of students who supposedly achieved the blurb.22 The resulting database is useless, because the SLO-blurbs do not signify anything. But the colleges can say that they are keeping track of learning as demanded by the accreditor.

There are countless examples of colleges that have been coerced down the SLO path. After the accreditor for Pima Community College in Arizona complained that the faculty was inadequately involved in the SLO process, the college implemented the TracDat approach and added an Educational Testing Service (ETS) standardized test. The accreditor responded with glowing praise that Pima’s progress report provided “ample evidence of the great progress the College has made with respect to assessment.”23 San Jose State University reported to its accreditor that it was finding “faculty resistance to what is still perceived as bureaucratically imposed workload of dubious value.” To address the problem, the campus adjusted its faculty reward system to force faculty participation, a step the accreditor praised.24

Cerritos College, south of Los Angeles, found a way to assess SLOs without involving faculty at all. The campus surveyed its students to ask them about each blurb. Students were asked whether they agreed with the statement, “I am able to analyze graphs and tables for important information.” If they said they agreed or strongly agreed, then that was considered evidence that the college’s quantitative reasoning expectations were being achieved.25 In a 2014 visit, the accreditor praised the work that Cerritos College had done:

The college has made much progress in meeting the requirements for establishing and assessing student learning outcomes for all courses. It was found by the team following the review of evidence provided and conducting interviews with faculty and administrators as well as SLO progress charts and timelines, that course-level student learning outcomes have been assessed, analyzed, and evaluated.

The accreditor denied the campus a passing grade, however, because the college had not completed the process of creating SLO-blurbs for all degrees and certificates at the major (rather than the course) level.26

The SLO bandwagon started with the idea that clarity about goals would lead colleges to engage students in rich and meaningful learning. The movement, however, is steering colleges toward the opposite: worthless bean-counting and cataloging exercises that give faculty members every reason to ignore or reject the approach. Yet rather than abandon the failed strategy, many of those on the SLO bandwagon insist that any criticism comes from faculty who just do not want to be held accountable. At an accreditor-sponsored training I attended, designed for campus administrators who are responsible for implementing SLOs, a participant asked about faculty resistance. The accreditor, rather than consider the possibility that the faculty have legitimate objections, dismissed faculty concerns as typical of self-interested instructors. The advice: full steam ahead! When I later complained that legitimate concerns were being dismissed inappropriately, the accreditor told me that participants had given the training high marks in the satisfaction survey, so my concerns were not valid.

So, if the fixation on “learning outcomes” is the wrong way to promote quality learning in college, what would be a better approach?

What Makes College Valuable?

Going to college is a lot of work. If you add up all of the class sessions to attend, assignments to complete, and decisions to make, a student needs to successfully perform something like 3,446 tasks, give or take, to get to graduation. It is a daunting to-do list, and the people who complete it are widely seen—in general—as more employable and better citizens and leaders. Why does college make a difference? Some people see college as just a screening device, sorting people in ways that give information to employers about who is worth hiring for what roles. Others believe that the college experience itself changes people in ways that make them more valuable as workers, or leaders.27

For those who are in the second camp—believing that students do gain something important in college—it is surprisingly difficult to identify what that something is. The simplistic but wrong answer is that it is all the specific knowledge gained. Anyone who has gone to college knows this is wrong—simply looking back at the courses listed on an undergraduate transcript would be enough to remind us that we remember very little about the books we read, or the formulas we applied in problem sets. The information was in our brains at the time, but other than a few skills that carried through to a graduate degree or a job, and maybe some random bits of knowledge that stuck, the details of what we learned are mostly a muddle. Quite simply, the knowledge gained did not stick. Anyone looking to pass the tests or get a passing grade on the papers today would need to take the classes and do the readings all over again.

College leaders say that the something that graduates gain from college is “critical-thinking skills.” But if you look at the Proficiency Profile—a test from the ETS that supposedly measures critical thinking skills—it looks like the same old SAT: grammar, reading comprehension, and algebra. Where are the critical thinking skills in remembering long-taught word meanings, or algebraic formulas? Being able to recognize a grammatically incorrect sentence is useful, to be sure, but it is hardly the excellence we want colleges to be aiming for in graduates. And if the ETS version of critical thinking is the answer to why college itself is important, why do some colleges offer separate courses in critical thinking—is it merely more test-prep classes? Indeed, ETS itself advertises its exam as a way that colleges can “demonstrate program effectiveness for accreditation and funding purposes.”28

Another test, the Collegiate Learning Assessment (CLA), takes a somewhat more sophisticated, dynamic approach to measuring what its sponsor says are critical-thinking skills. The bulk of the ninety-minute exam involves students using provided reference materials—technical reports, data tables, news articles, and memos—to write an essay that makes a recommendation or solves a presented problem. Independent readers score the essay based on the logic and analysis of the argument and the effectiveness of the writing (including grammar). The sixty-minute essay and twenty-five multiple-choice questions yield scores that range from 400 to 1600.29

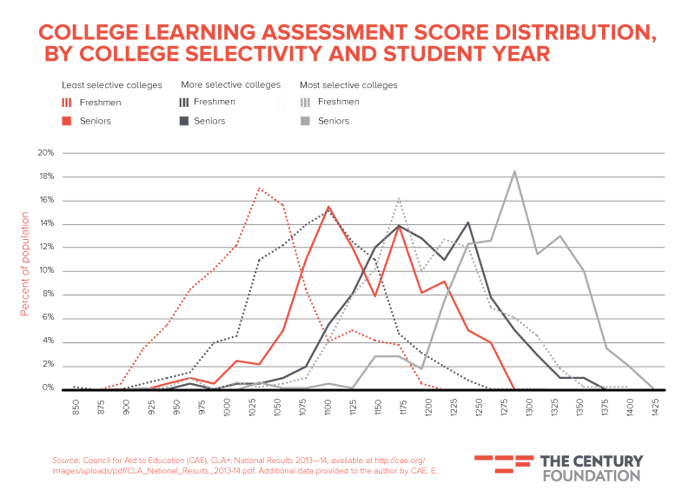

The results of the CLA, administered to 31,652 students at 169 institutions in 2013–14, show that seniors actually do perform better than freshmen, at all types of colleges. But the CLA scores also show something else: a big difference between the scores of students at highly selective schools and those of students at less-selective four-year colleges. The freshmen at schools such as UCLA already have the CLA-measured skills of the seniors at a moderately selective college such as, say, Michigan State. The freshmen at moderately selective colleges, meanwhile, had scores similar to seniors at the least selective colleges.30 (See Figure 1.)

Figure 1.

Imagine lawmakers who, focused on outcomes, want to require a particular CLA score for graduates to get their degrees. What score should they pick? At 1000, most seniors would pass, but so would most freshmen. At 1200, many of the freshmen at the most selective colleges would be eligible for a degree when they walked in the door, while most of the seniors at less-selective colleges would be denied a credential. What might be more interesting is measuring distance traveled: How much does a student have to improve over the time spent in college? While that is perhaps a more accurate measure, it still fluctuates considerably from school to school, particularly along the selectivity spectrum: the difference between the average freshman score and the average senior score is 106 for the least-selective colleges, 87 for the more-selective ones, and 63 for the most-selective schools.31

Testing a few skills does not come close to capturing the value that college can offer. In a recent essay, researchers Todd Rose and Ogi Ogas argue that critical thinking is not one thing, but is different in every discipline and situation, and also depends on “the particular jaggedness of a thinker’s mind.” They suggest that “instead of settling for the illusion that we can somehow compel all minds toward some standard liberal-arts education, we should grant all students the freedom to develop the particular form of liberal-arts education that they need for their own pathway.”32 In college people do gain “knowledge” and they gain “skills.” But any accountability system that attempts to measure and compare the outcomes will be severely lacking, because each student’s advancement is a unique result of their approach to their 3,446 tasks, a diversity that is part of what makes higher education valuable to them and to society.

All those discussions and problem sets and papers give students experience grappling with lots of different kinds of problems—human, artistic, mathematical, scientific—in various ways, dealing with a wide variety of instructors who have their own idiosyncrasies, preparing students to better manage all kinds of people and complications later in life. Students may or may not have mastered calculus or rhetoric or computer science by graduation, but they have acquired the ability to function in society and in a workplace more confidently and more successfully, because those thousands of tasks in college have helped make them more inquisitive, adaptable, creative, and resourceful—in their own way.

What Gets Students Through to Graduation?

College, when it works most reliably, is a complex system of designed norms and nudges that make activities such as going to class, studying, writing papers, and interacting with peers a natural, almost automatic part of each day. Students spend most of their time on or near campus, where those around them have similar goals or are supporting them in their goals. Their diversions from classwork—whether work, play, or politics—bind them closer to the school and to their peers rather than taking them away, helping them to feel like a valued and engaged part of a community. (It is worth noting that college comes from the same word root as colleague.) The environment, in effect, operates in a way that gets students to stay with the program, which consists of thousands of academic exercises that are designed and implemented by the faculty as part of the plan.

To be effective, academic exercises—the instructor-designed activities and assignments—must take into consideration the skills and knowledge of the particular students, so that the students are intrigued and challenged in ways that demand significant time and effort, but are not overwhelmed or lost. Good teachers scan and test for signs that students are confused or do not know where to start, and they bolster or recalibrate lectures, discussions, and assignments as needed. The student work is sometimes individual, sometimes group, and frequently creative and interactive: writing, reacting, rewriting, designing, and dissecting. Teaching, in a campus environment that supports it, keeps students plugging away on those 3,446 tasks on their way toward graduation.

The supportive environment (including the social, psychological, and financial elements that will be addressed by other reports in this series), however, is not enough to keep students hanging around. Studying, prompted by quality teaching, is critical. In research involving tens of thousands of students and accounting for dozens of other possible explanatory factors, including pre-college academic preparation and socioeconomic status, professor of education Alexander Astin and his team found that “the most basic form of academic involvement—studying and doing homework—has stronger and more widespread positive effects [on student outcomes] than almost any other involvement measure or environmental measure.”33 Of the fifty-seven student activities that Astin measured, the ones most associated with increased graduation revolved around the in-class experience: homework, going to class, working on an independent research project, giving class presentations, interacting with faculty, and taking essay exams (but not multiple-choice tests).

The other activities in Astin’s research that correlated with graduation were ones that connect students more to their peers and to the college: participation in internship programs, in volunteer work, or in intramural sports. Working on campus (part time) was a positive, but working off campus (full or part time) acted as a negative. (Astin’s findings regarding alcohol consumption were interesting. More drinking was associated with higher rates of graduation, perhaps because alcohol plays a role in reducing social inhibitions and helping students to feel a part of a community. But alcohol consumption was also associated with lower grades, pointing to the ongoing campus challenge of tolerating some drinking, but not too much.)

The involvement of students in rich and meaningful educational activities is what keeps students making progress toward the degree, and it is what produces the outcomes that we associate with a college degree. But trying to distill the infinitely varied outcomes down to a list or a test, for accountability purposes, is a formula that, rather than improving education, more likely undermines the quality of the educational activities themselves. To improve college teaching, we need a different approach.

Addressing the Problems in College Teaching

All of the components of a college program—especially quality teaching—are important to students reaching the degree, but without quality teaching the degree is not worth reaching. Unfortunately, the incentive systems in higher education often make it difficult to promote the inspired instruction, discussions, and creative, personalized projects that prompt students to study and ultimately graduate. In Our Underachieving Colleges, Derek Bok laid out his concern—after serving as president of Harvard, no less—that faculty are not adequately attentive to student learning. (He is not the first to raise the issue—it has been a complaint for decades, or maybe centuries.) The result, he said, is that “Many seniors graduate without being able to write well enough to satisfy their employers. Many cannot reason clearly or perform competently in analyzing complex, non-technical problems, even though faculties rank critical thinking as the primary goal of a college education.”34 While Bok blamed professors, other commentators blame low standards on students who want to party and who give low ratings to professors who demand more,35 while still others try for balance by asserting that there is a conspiracy between students and faculty, with each having low expectations of the other: “faculty pretend to teach, students pretend to study, and as long as parents and others paying the bills are oblivious, everyone is happy.”36

Maybe the blame should be shared, but in the end, it does not matter. The main problem is that most of the incentives do tend to steer everyone in the wrong direction. Professors have little reason, other than their own integrity, to push back against students’ low expectations. Expecting more out of students just means seeing more students during office hours, receiving more grade appeals, and creating tests and papers that are much harder to grade because they are about thinking and the application of concepts, rather than the recall of facts. “We know students learn more when expectations are high and when feedback on what they need to do to improve is constant,” says William Tierney, a professor of education at the University of Southern California. “[Students] would work harder if we expected it of them—but we don’t. . . [T]he incentives for engagement between student and faculty are few.”37

Bok, in his book, was not optimistic about the potential for improvement: “The weaknesses of undergraduate education may be real, but they serve important faculty interests. Like most human beings, professors do not relish having their work evaluated by others. . . . Nor do instructors who are used to lecturing welcome research on new pedagogies that may put pressure on them to change the way they teach.” But he did offer one clue about a possible way forward: “investigations performed on one’s own campus,” rather than somewhere else, have the power “to persuade faculty members that the findings are relevant to their college and their students.” Evidence about their own institution is harder to ignore than are general calls for improved learning, or alarm bells about college graduates in general.

Bok’s notion—shining a light on the evidence of students’ academic engagement on their own campuses—is the reform that could make a real difference in teaching, and therefore graduation rates as well. The evidence of excellent or inadequate student engagement is student work: the papers, written exams, presentations and projects from students’ 3,446 tasks to a college degree. Unearthing those artifacts is the key to changing the incentive system in higher education so that excellent teaching—including the college environment and supports that make it possible—is valued and encouraged.

How to Get Better Learning and Higher Graduation Rates

Sometimes, college faculties do contain poor teachers who are shirking their responsibilities. The best strategy to prevent bad teaching, however, is not to focus on the output of this flawed process, but rather to look at the educational process itself. Shining a light on the work that students do in their classes would provide the “telling evidence” that Bok said would make a difference.

In my recent review of accreditation documents, I saw some evidence of that strategy. For example, at Whittier College, a liberal arts college near Los Angeles, portfolios of student work are used in the process of reviewing majors and programs on a rolling basis. External experts are involved in the process.38 At Cal Poly San Luis Obispo, random samples of student essays were collected as part of an effort to improve teaching and learning, and its rolling review of majors also includes extensive use of external reviewers.39 University of California–Berkeley recently revised its process for reviewing majors, now requiring them to involve external experts, with specific guidelines to protect against conflicts of interest. Reviewing samples of student work is strongly recommended under the policy.40

The benefit of starting with the student work as the unit of analysis is that it respects the unlimited variety of ways that colleges, instructors, and students, who arrive with different skill levels, engage in the curriculum. Rather than demanding SLOs or a standardized test, the focus of accountability efforts should be on the evidence of student engagement: the work students do in the form of papers, written exams, presentations, and projects.

If the Obama administration wants to promote better outcomes in higher education, it should start by building on these positive examples of efforts to shine a light on the evidence of students’ academic engagement. Validating colleges’ own quality-assurance systems should become the core of what accreditors do if they want to serve as a gateway to federal funds. Think of it as an outside audit of the university’s academic accounting system. With this approach, colleges are responsible for establishing their own systems for the occasional review of their majors and courses by outside experts they identify. Accreditors, meanwhile, have the responsibility of auditing those campus review processes, to make sure that they are comprehensive and valid, involving truly independent outsiders and the examination of student work. Both the reviews and the audits should include elements of random selection, so that any student or major could be selected. This approach makes it less likely, for example, that a diploma-mill situation like the one involving football players at the University of North Carolina could exist for long without detection.41

Second, the Department of Education should tell accreditors to take a fresh look at whether they are properly implementing the new “credit hour” definition that was adopted in 2010. The credit hour, the quantity measure of higher education (the units assigned to a class), has been widely scorned as promoting a “butts in seats” approach to college that involves sitting passively in lecture classes.42 The 2010 action changed that, so that now a credit is no longer a time gauge but instead a measure of “an amount of work . . . verified by evidence of student achievement.”43 For federal aid purposes, a credit hour is now student work, the evidence of students’ achievements.

Some accreditors, however, have not made the shift in monitoring the credit hour and are still allowing an approach that just counts course schedules rather than student engagement. For example, the excerpt in Figure 2 is from a form currently used by one accreditor to check on whether a college is complying with the federal credit-hour standard.44

Figure 2. Credit-Hour Monitoring Form (excerpt)

Notice that in the top section, for a ground (brick and mortar) campus, the reviewer is only required to check to see if the butts-in-seats time matches the credit hours that are assigned to the course. There is absolutely no expectation that the reviewer will check to see whether students had to do anything other than sit there to pass the course. In contrast, for an online course—the bottom section—there is, at least, an assessment of whether the “amount of work” is appropriate given the credit being awarded. The online courses, in that sense, are held to a higher standard than are ground courses. Except that the analysis involves only a course syllabus, which is like judging a restaurant by the menu. A syllabus is not enough to determine what amount and level of work is expected of students: only a review of a sampling of actual student work can accomplish that.

The Department of Education therefore should work with accreditors and colleges to ensure that their understanding of the credit hour requirement is accurate and up-to-date. It is not enough for students to sit in lectures for twelve hours a week for them to qualify for a “full time” chunk of financial aid from taxpayers. It is important to remember, however, that the amount and quality of student work for a given number of units will vary by college, because the skills and background of enrolled students will vary from school to school, as will the types of learning experiences in different disciplines. The benefit of a locally determined and accreditor-checked approach is that it allows for the consideration of whether the expectations are appropriate given the students who are enrolled. Standardized tests, for the same reason, usually are not a good way to determine college quality. College is about advancing students from where they start, not reaching a score and stopping; student work is the evidence of that ongoing advancement.

Are these two steps enough? I am concerned that even with these changes, the system would still be vulnerable to becoming an insular system of mutual praise. One way to prevent that from occurring would be to select samples of student work from each campus and subject them to a separate, independent review—or even, perhaps, make them public (without students’ identities). The organization that runs the International Baccalaureate program in high schools uses that type of system, choosing random students and having the schools send their papers and written exams to check on each school’s rigor. Such a system could be implemented among colleges, provided an entity—either a section of the Department of Education or an independent, outside body—was authorized to initiate and refine it.

Conclusion

Too often, policy discussions about college completion or degree attainment treat the question of quality—the actual teaching and learning—as an afterthought or as a footnote. Attention to graduation, per se, is misplaced and dangerous for two reasons. First, obsessing about graduation can lead colleges to avoid enrolling students whose family situations or academic background are less solid, undermining access for the populations that could most benefit from higher education. Second, colleges—even high-quality, selective ones—worried about graduation rates can too easily become little better than diploma mills, expecting little from students rather than addressing the teaching and supports that could help students excel. Standardized tests can be a damaging and counterproductive way to protect against that danger.

Imposing standardized tests or large, bureaucratic assessment-tracking mechanisms is also counterproductive, deadening the curriculum, wasting the time of faculty, and leading students to wonder why they are bothering with school.

A student-work approach serves as a check on whether colleges are engaging students in projects that intrigue and challenge them, a function of both the learning experiences that instructors design, and the campus supports, services and environment that help students focus on school and stay on track through those 3,446 tasks. An accountability approach that starts with the artifacts of student engagement stands the best chance of prompting institutional redesigns that will increase low-income students’ likelihood of graduation, with a high-quality degree.45

This paper is part one in a series on College Completion supported by Pearson and published by The Century Foundation.

Notes

1. U.S. Census Bureau, Census Atlas of the United States (Washington, D.C.: Government Printing Office, 2007), Chapter 10, https://www.census.gov/population/www/cen2000/censusatlas/pdf/10_Education.pdf.

2. In 2015–16, the U.S. Department of Education, National Center for Education Statistics, projects that 1,847,000 people will be awarded bachelor’s degrees. Degrees Conferred Projection Model, 1980–81 through 2024–25, prepared April 2015, https://nces.ed.gov/programs/digest/d14/tables/dt14_318.10.asp?current=yes.

3. Of those who were high school sophomores in 2002, 14 percent and 60 percent of those in the bottom and top quartile, respectively, had a bachelor’s degree by 2012. National Center for Education Statistics, “Postsecondary Attainment: Differences by Socioeconomic Status,” May 2015, http://nces.ed.gov/programs/coe/indicator_tva.asp.

4. For U.S. adults age 25 and above. Derived from U.S. Census Bureau, Current Population Survey, 2014 Annual Social and Economic Supplement, “Table 3. Detailed Years of School Completed by People 25 Years and Over by Sex, Age Groups, Race and Hispanic Origin: 2014,” http://www.census.gov/hhes/socdemo/education/data/cps/2014/tables.html.

5. Higher Education for American Democracy: A Report of The President’s Commission on Higher Education, December 11, 1947, 36, http://hdl.handle.net/2027/mdp.39015001995664.

6. Derived by the author from U.S. Census Bureau, Current Population Survey, 2014 Annual Social and Economic Supplement.

7. The White House, “The President’s Plan for A Strong Middle Class & a Strong America,” February 12, 2013, https://www.whitehouse.gov/sites/default/files/uploads/sotu_2013_blueprint_embargo.pdf.

8. Marco Rubio, “Reform Higher-Ed for the 21st Century,” National Review, October 1, 2015, http://www.nationalreview.com/article/424871/reform-higher-ed-accreditation-21st-century-marco-rubio.

9. “Trust Busting Higher Ed,” Wall Street Journal, October 4, 2015, http://www.wsj.com/articles/trust-busting-higher-education-1443997741.

10. U.S. Department of Education, “Department of Education Advances Transparency Agenda for Accreditation,” Press Release, November 6, 2015, http://www.ed.gov/news/press-releases/department-education-advances-transparency-agenda-accreditation.

11. Ted Mitchell, “Strengthening Accreditation’s Focus on Outcomes,” Under Secretary’s Blog, U.S. Department of Education, posted February 4, 2016, http://sites.ed.gov/ous/2016/02/strengthening-accreditations-focus-on-outcomes/.

12. Doug Lederman, “Explaining the Accreditation Debate,” InsideHigherEd, March 29, 2007, https://www.insidehighered.com/news/2007/03/29/accredit.

13. Frank Newman, Lara Couturier and Jamie Scurry, The Future of Higher Education: Rhetoric, Reality, and the Risks of the Market (San Francisco: Jossey-Bass, 2004), 139.

14. Peter Ewell, “Accreditation and Student Learning Outcomes: A Proposed Point of Departure,” Council for Higher Education Accreditation, 2001, http://www.chea.org/pdf/EwellSLO_Sept2001.pdf.

15. Doug Lederman, “Explaining the Accreditation Debate,” InsideHigherEd, March 29, 2007, https://www.insidehighered.com/news/2007/03/29/accredit.

16. Gonzaga University, “How to Write Course Learning Outcomes for Your Syllabus,” http://www.gonzaga.edu/campus-resources/offices-and-services-a-z/academic-vice-president/CTA/LEAD/HowToWriteCourseLearningOutcomesforYourSyllabus.pdf.

17. Lumina Foundation, The Degree Qualifications Profile (Indianapolis, Ind.: Lumina Foundation, 2014), http://degreeprofile.org/press_four/wp-content/uploads/2014/09/DQP-web-download.pdf.

18. Clifford Adelman, “To Imagine a Verb: The Language and Syntax of Learning Outcome Statements,” National Institute for Learning Outcomes Assessment, February 2015, http://learningoutcomesassessment.org/documents/Occasional_Paper_24.pdf.

19. “Degree Qualifications Profile,” Lumina Foundation, http://degreeprofile.org/read-the-dqp/the-degree-qualifications-profile/civic-and-global-learning/.

20. For a brief moment, the authors admit this, saying—emphasis is theirs—“The proficiency statements do not prescribe how well a student must demonstrate proficiency.” The only way to judge quality, they say, is by seeing what instructors consider to be student work worthy of a passing grade. Ibid., 13.

21. The Academic Senate for California Community Colleges, “Guiding Principles for SLO Assessment,” adopted fall 2010, at http://www.merritt.edu/wp/slo/wp-content/uploads/sites/296/2014/02/Guiding-Principles-for-SLO-Assessment.pdf.

22. See, for example, the TracDat guide for Purdue University, at https://www.purdue.edu/assessment/PDF/Academic_Purdue%20TracDat_Training_Manual.pdf. An example of a completed database, in which Saddleback College faculty have compiled data on 2,964 SLOs, can be found at http://www.saddleback.edu/epa/student-learning-outcomes-and-administrative-unit-outcomes.

23. Higher Learning Commission, letter to Chancellor Suzanne Laura Miles, January 28, 2013, https://www.pima.edu/about-pima/accreditation/docs/HLC-staff-analysis.pdf.

24. SJSU Interim Report to WASC, November 8, 2010 (quote on final page of text—no page numbers), and letter from WASC, dated February 2, 2011.

25. Cerritos College, General Education Competency: Quantitative Reasoning Assessment Report, 2011–12, http://cms.cerritos.edu/uploads/SLO/GE_Quantitative_Reasoning_Assessment_Report.pdf.

26. ACCJC, External Evaluation Report, Cerritos Community College, March 3–6, 2014.

27. Joseph E. Stiglitz, “The Theory of ‘Screening,’ Education, and the Distribution of Income,” American Economic Review 65, no. 3 (1975): 283–300.

28. ETS Proficiency Profile webpage, https://www.ets.org/proficiencyprofile/about/. Sample test items at https://www.ets.org/s/proficiencyprofile/pdf/sampleques.pdf.

29. See the scoring rubric at http://cae.org/images/uploads/pdf/CLA_Plus_Scoring_Rubric.pdf.

30. Council for Aid to Education (CAE), CLA+: National Results 2013–14, http://cae.org/images/uploads/pdf/CLA_National_Results_2013-14.pdf. Additional data provided to the author by CAE.

31. Ibid., Table 2.

32. Todd Rose and Ogi Ogas, “The Faulty Foundation of American Colleges,” Chronicle Review (Chronicle of Higher Education), January 22, 2016, B6.

33. Alexander Astin, What Matters in College: Four Critical Years Revisited (San Francisco: Jossey-Bass, 1993).

34. Derek Bok, Our Underachieving Colleges: A Candid Look at How Much Students Learn and Why They Should Be Learning More, new edition (Princeton, N.J.: Princeton University Press, 2007).

35. Craig Brandon, The Five-Year Party (Dallas, Tx.: Benbella Books, 2010), 52 and 74.

36. Christopher Winship, “The Faculty-Student Low-Low Contract,” Springer Science+Business Media, 2011, http://www.springerlink.com/content/blnxu23738062374/fulltext.pdf.

37. William G. Tierney, “Creating a Meaningful College Experience in an Era of Streamlining,” Chronicle of Higher Education, June 17, 2011.

38. Report of the WASC Visiting Team, Educational Excellence Review, To Whittier College, visit date March 12–15, 2013.

39. Report of the WASC Visiting Team, Educational Excellence Review, To California Polytechnic University San Luis Obispo, April 2–5, 2012.

40. “Academic Program Review: UC Berkeley Guide for the Review of Existing Instructional Programs,” as revised June 2015, http://vpsafp.berkeley.edu/media/GUIDE_June2015.pdf.

41. Kenneth L. Wainstein, A. Joseph Jay III, and Colleen Depman Kukowski, “Investigation of Irregular Classes in the Department of African and Afro-American Studies at the University of North Carolina at Chapel Hill,” Cadwalader, October 16, 2014, http://www.wralsportsfan.com/asset/colleges/unc/2014/10/22/14104501/148975-UNC-FINALREPORT.pdf.

42. Amy Laitinen, “Cracking the Credit Hour,” New America Foundation, September 5, 2012, https://www.newamerica.org/education-policy/cracking-the-credit-hour/.

43. See the discussion about, and a link to, the credit hour rule at “Program Integrity Questions and Answers–Credit Hour,” U.S. Department of Education, http://www2.ed.gov/policy/highered/reg/hearulemaking/2009/credit.html.

44. WASC Senior College and University Commission, “Federal Compliance Forms: Credit Hour and Program Review Length Form,” http://www.wascsenior.org/credit-hour-and-program-length-review-form.

45. For a discussion of institutional redesign, see Alicia C. Dowd and Estela Mara Bensimon, Engaging the “Race Question”: Accountability and Equity in U.S. Higher Education (New York: Teachers College Press, 2015).