Almost two-thirds of students who enter community colleges each year are judged academically unprepared for college-level coursework.1 To enroll, these students typically must take one or more “remedial” or “developmental” math or English courses2 that do not count toward a college degree. The students most likely to be referred to these courses are the low-income and minority students for whom a college degree could change the trajectory of their lives, and whose success could help narrow the nation’s appalling disparities in educational attainment by income and race.

The bulk of the evidence, however, suggests that the $4 billion annual investment in services to help underprepared students is having little positive impact on the success of those students in community colleges.3 In this report, we review that research, describe findings from studies on four types of reforms under way at various colleges, and conclude with our view that a wholesale redesign of the student experience at community colleges is needed to make a real difference in the outcomes of underprepared students.4

Traditional Remedial Education Is Not Working

Services for underprepared students aim to strengthen students’ academic skills to the point that they can be successful in their college-level studies. To assess these programs’ effectiveness, a variety of recent studies have examined the extent to which remedial coursework helps underprepared students to successfully complete introductory college-level courses—typically, the first levels of English composition and college-level mathematics—within a given span of time, such as one or two years after entry. Using comparison groups of similar students who did not undergo remediation, rigorous statistical analyses suggest that remediation does not improve long-term student outcomes.5 That is, among students who test barely below “college ready,” taking one remedial course typically will not increase their likelihood of long-term progression and success (see Figure 1).

Figure 1. Overview of Findings on Outcomes for Developmental Students

Outcomes examined: short-term impacts (persistence; passed college-level subject; grade in college-level subject) and medium- and long-term impacts (persistence; college-level credits earned; credential and/or transfer). Not every study reported every outcome; each row lists the reported findings in this outcome order.

| Subject | Study | Level | Findings |
| --- | --- | --- | --- |
| Math | Tennessee | Upper | Neg; Null (conditional); Null; Null (conditional); Neg (credential) |
| Math | Texas | Upper | Null; Null |
| Math | Ohio | Upper | Null; Pos (transfer) |
| Math | LUCCS | Upper | Neg; Neg; Null; Null; Null |
| Math | Florida | Upper | Null; Null; Null; Null |
| Math | Virginia 1 | Lower vs. Middle | Null; Neg (credential) |
| Math | Tennessee | Lower vs. Middle | Null; Null (conditional); Null; Null (conditional); Pos (credential) |
| Reading | Tennessee | Upper | Pos; Null (conditional); Null; Null (conditional); Null (credential) |
| Reading | Texas | Upper | Null; Null |
| Reading | Ohio | Upper | Null; Null |
| Reading | LUCCS | Upper | Neg; Neg; Neg; Neg; Neg (credential) |
| Reading | Florida | Upper | Null; Neg; Null; Null |
| Reading | Virginia 2 | Upper | Null; Null (conditional); Null; Neg |
| Reading | Virginia 2 | Lower vs. Upper | Neg; Null (conditional); Neg; Neg |
| Reading | Tennessee | Lower vs. Middle | Null; Null (conditional); Pos; Pos (conditional); Null (credential) |
| Writing | Tennessee | Upper | Neg; Null (conditional); Null; Neg (conditional); Neg (credential) |
| Writing | Virginia 2 | Upper | Null; Null (conditional); Null; Null |
| Writing | LUCCS | Writing & Reading vs. Reading Only | Null; Null; Null; Null; Null |
| Writing | Virginia 2 | Lower vs. Upper | Neg; Null (conditional); Neg; Null |
| Writing | Tennessee | Lower vs. Upper | Pos; Pos (conditional); Null; Null (conditional); Null (credential) |

Note: “Conditional” signifies that only outcomes for students who enrolled in college-level courses, or persisted in college, were compared. LUCCS stands for large urban community college system.

Source: “What We Know about Developmental Education Outcomes,” CCRC, Teachers College, Columbia University, January 2014, 3, http://ccrc.tc.columbia.edu/media/k2/attachments/what-we-know-about-developmental-education-outcomes.pdf.

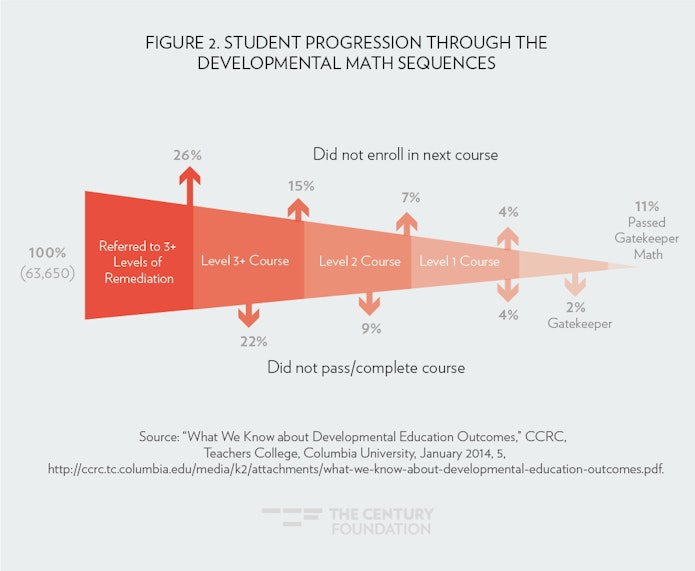

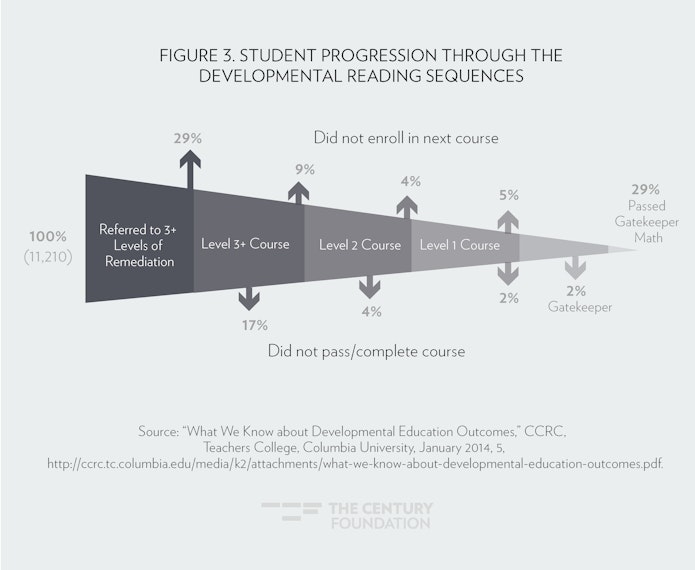

Among students who test much more poorly, who are often referred to two or three remedial courses in each subject area, the likelihood of ever completing a college-level course in that subject is quite low. For example, in one sample, only 11 percent of students referred to three remedial math courses successfully complete college-level math within three years.6 (See Figures 2 and 3.)

We have identified several reasons why the traditional system of services for underprepared students has little impact on the students referred to it. First, the exams that place students into remedial courses tend to do a poor job of predicting students’ actual likelihood of success in college-level coursework; accordingly, some students who are referred to remedial education do not in fact need it.7 For these students, the time required to complete remedial coursework sets them behind their peers without conferring any additional academic benefit. Second, a lengthy remedial sequence tends to eat away at financial aid and students’ own finances, encouraging students to drop out before they ever reach college-level courses. Indeed, the majority of students who do not complete their remedial sequence never actually fail or withdraw from a remedial course; instead, they simply do not show up for their first course, or drop out between courses.8

Another problem is that remedial education can fail to address students’ specific needs. For example, students who complete remedial courses may have learned more than necessary about some skills, such as diagramming sentences or factoring quadratic equations, while still lacking other skills foundational to success in college-level math, English, and other disciplinary courses such as history and biology.9 Further, beyond weaknesses in specific reading, writing, and math skills, many underprepared students struggle because of weaknesses in an array of other skills, such as help-seeking and time management, which go undiagnosed and unaddressed within the traditional system.10

Finally, the instructional approaches within traditional remedial courses can also be a barrier for students. In many such courses, instructors emphasize drill and practice on specific skills, without connecting those skills to interesting and relevant college-level tasks.11


When all of these factors are taken together, it is not surprising that traditional remedial education is not effective in helping underprepared students rise to and succeed in college-level work. To address these problems, colleges and some statewide systems are experimenting with new forms of assessment, program organization, curriculum, and instruction. We describe these developments below, before moving on to the idea of integrating the reform of remedial education into broader and more comprehensive changes at community colleges.

Figuring Out Who Needs Remedial Help

Research has found that standardized multiple-choice math and English placement exams do a poor job of identifying which students do and do not need help, and as a result many students are misplaced into either remedial or college-level coursework. This has led to a widespread movement to reform assessment and placement practices at colleges. Indeed, skepticism about the value of traditional placement exams led the testing company ACT to announce that it would discontinue its placement product (COMPASS) in 2016. To replace the traditional approach, some colleges and systems have created customized tests that are more closely aligned with their own college-level programs, including tests with different standards of readiness in different disciplines.12 To make individual students’ test results as accurate as possible, many colleges are also giving students more explicit information about the exams, along with test-prep workshops or online tutorials.13

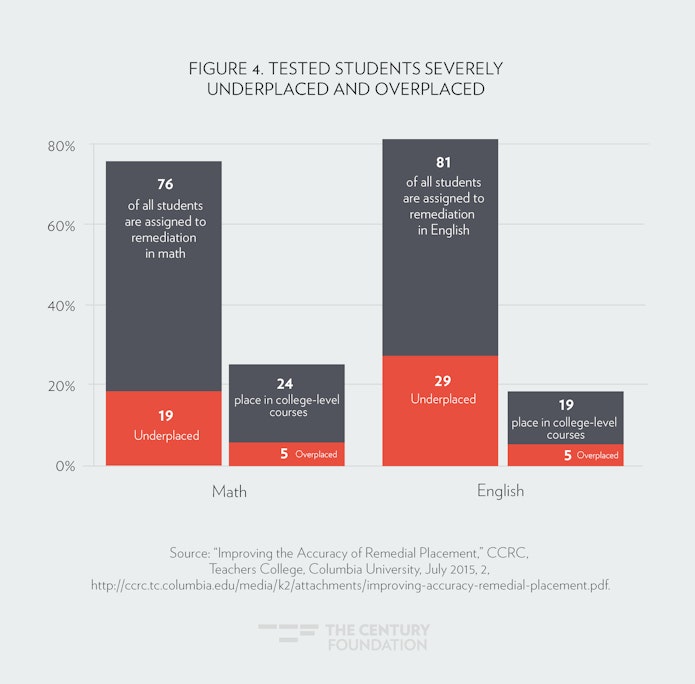

Students are more likely to be “under-placed” in remedial coursework (assigned to these courses when they could have succeeded in the relevant college-level course) than “over-placed” in college-level coursework (assigned directly to college-level courses that they then fail).14 (See Figure 4.)

This suggests that exam cutoff scores for college-level coursework tend to be too high. Given the prevalence of under-placement, some colleges using traditional placement exams have recently lowered their cutoff scores. Although the evidence is still tentative, research suggests that lowering too-high cutoffs allows many more students to enroll in introductory college-level math and English courses while only slightly decreasing those courses’ pass rates, with the net effect that substantially more students complete college-level math and English.15 (See Figure 5.)
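The net effect follows from simple arithmetic. As a rough model, the share of an entering cohort that passes a college-level course directly is the share placed into it times that course's pass rate. The sketch below uses hypothetical rates, not figures from the cited research, and it ignores students who reach the course only after remediation. It also computes the break-even pass rate below which a lower cutoff would stop paying off.

```python
# Hypothetical illustration: the rates below are invented for the example
# and do not come from any study cited in this report.

def direct_completion_share(placement_rate: float, pass_rate: float) -> float:
    """Share of an entering cohort that passes the college-level course directly.

    Simplifying assumptions: every student placed at college level enrolls,
    and students who complete the course only after remediation are ignored.
    """
    return placement_rate * pass_rate


def break_even_pass_rate(old_placement: float, old_pass: float,
                         new_placement: float) -> float:
    """Lowest pass rate under the new cutoff that keeps direct completions unchanged."""
    return old_placement * old_pass / new_placement


before = direct_completion_share(placement_rate=0.30, pass_rate=0.65)  # higher cutoff
after = direct_completion_share(placement_rate=0.50, pass_rate=0.60)   # lowered cutoff
floor = break_even_pass_rate(0.30, 0.65, 0.50)

print(f"direct completion before: {before:.3f}")
print(f"direct completion after:  {after:.3f}")
print(f"pass rate could fall to {floor:.2f} before the change stops helping")
```

In this made-up case, a five-point drop in the pass rate is far outweighed by the twenty-point rise in placement, which is the pattern the research describes.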

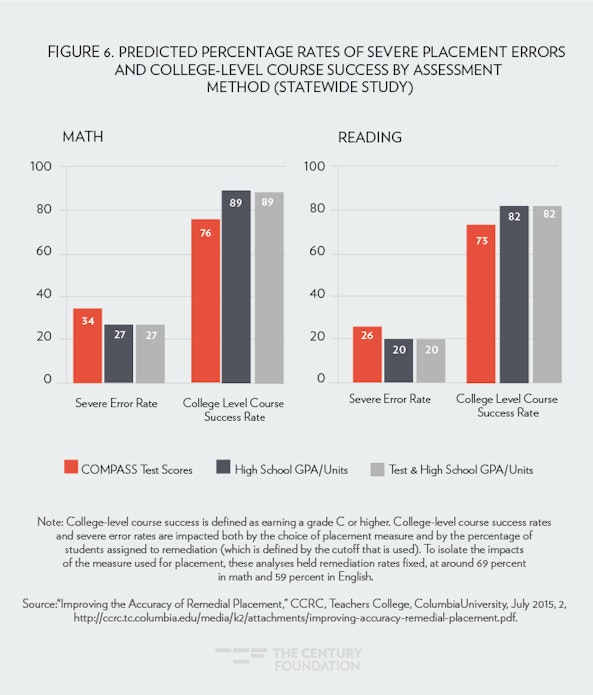

Increasingly, colleges are also experimenting with approaches that supplement placement exam scores with other indicators of student readiness. While many colleges are interested in students’ broader non-cognitive attributes, such as motivation or “grit,” most are focusing on high school academic records, for two reasons. First, high school performance indicators such as overall GPA, math course-taking and GPA, or English course-taking and GPA are concrete and relatively easy to gather. Second, research suggests that adding high school GPA as one of multiple measures helps reduce placement error rates, in large part because GPA captures non-cognitive attributes such as academic motivation.16 (See Figure 6.)

More specific measures of non-cognitive readiness may also have some predictive power over and above GPA. For example, in a pilot study of the Educational Testing Service’s Success Navigator™ non-cognitive test, which used data from over 4,000 students at seven colleges, researchers found that placement exam scores alone explained approximately 8 percent of the variation in students’ performance in their first college-level English course; adding high school GPA explained an additional 6 percent, and adding Success Navigator scores explained another 3 percent (the corresponding figures for math were 2 percent, 7 percent, and 2 percent).17
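Assuming the reported percentages are successive increments in explained variance (R²), the cumulative totals are simple sums of those increments:

$$
\begin{aligned}
R^2_{\text{English}} &\approx \underbrace{0.08}_{\text{exam}} + \underbrace{0.06}_{\text{HS GPA}} + \underbrace{0.03}_{\text{Success Navigator}} = 0.17 \\
R^2_{\text{math}} &\approx \underbrace{0.02}_{\text{exam}} + \underbrace{0.07}_{\text{HS GPA}} + \underbrace{0.02}_{\text{Success Navigator}} = 0.11
\end{aligned}
$$

Read this way, most of the variation in first-course performance remains unexplained even with all three measures, and in math, high school GPA adds more predictive power than the placement exam itself.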

The most developed experience with assessment reform thus far has taken place at Long Beach City College. The college began studying the usefulness of student high school transcript data in 2011 and found that students’ performance in high school courses was a far better predictor of college performance than placement test scores. Long Beach developed a multiple-measures algorithm that increased college-level placement from 15 percent to almost 60 percent in English, and from 10 percent to over 30 percent in math. In other words, in improving the accuracy of placement, the algorithm also substantially lowered the effective cutoff into college-level coursework. In fall 2012, Long Beach students placed into college-level coursework using multiple measures had slightly lower but statistically similar pass rates to those placed into the same courses based on the exam score alone (62 percent versus 64 percent in English, and 51 percent versus 55 percent in math). As a result, multiple measures substantially increased the overall proportion of entering Long Beach students who completed college-level math and English, with particularly notable gains for black and Hispanic students.18
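Since Long Beach’s actual algorithm is not described here, the sketch below uses a deliberately simple, hypothetical rule instead: a student is placed at college level if either the placement exam score or the high school GPA meets its cutoff. The cutoff values, score scale, and field names are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    exam_score: int  # placement exam score (hypothetical 0-100 scale)
    hs_gpa: float    # high school GPA on a 4.0 scale


# Hypothetical cutoffs, chosen only for illustration.
EXAM_CUTOFF = 80
GPA_CUTOFF = 2.6


def place(applicant: Applicant, use_gpa: bool = True) -> str:
    """Either/or rule: college level if the exam OR (optionally) GPA clears its cutoff."""
    if applicant.exam_score >= EXAM_CUTOFF:
        return "college-level"
    if use_gpa and applicant.hs_gpa >= GPA_CUTOFF:
        return "college-level"
    return "remedial"


def college_level_share(applicants: list[Applicant], use_gpa: bool) -> float:
    """Fraction of a cohort placed at college level under the chosen rule."""
    placed = sum(place(a, use_gpa) == "college-level" for a in applicants)
    return placed / len(applicants)


# A tiny made-up cohort.
cohort = [Applicant(85, 2.1), Applicant(70, 3.2), Applicant(60, 2.0), Applicant(75, 2.8)]
print(college_level_share(cohort, use_gpa=False))  # exam score only
print(college_level_share(cohort, use_gpa=True))   # exam score or GPA
```

Because an either/or rule can only add college-level placements, it necessarily lowers the effective cutoff too, the same side effect noted above for Long Beach. Holding the college-level share constant while adding GPA would require raising one of the cutoffs at the same time.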

Based on Long Beach City College’s experience, many state systems and individual colleges are contemplating a move toward multiple measures. In 2016, North Carolina’s community college system will be the first to adopt GPA as a multiple measure statewide.19 One key question remains for practitioners, however: How much of Long Beach’s improvement in course completion is due to admitting a larger proportion of students into college-level coursework, and how much would results improve if a college held that proportion constant and used multiple measures only to change which students are admitted? We hope to address this question as part of a rigorous random-assignment study of multiple measures that we are currently conducting with seven community colleges of the State University of New York.20

As colleges move toward the accelerated course structures for underprepared students described in the next section, their questions regarding readiness assessment are also beginning to change. Rather than asking, “How can we sort students into college-ready or not college-ready?” colleges are now beginning to ask, “How can we determine the most useful course structures and supports to assign to this student?” To appropriately answer this question, colleges will need to more explicitly consider each student’s academic and career goals as part of their readiness assessment. Later in this report, we will discuss that issue in more detail.

Accelerating Remedial Coursework

One indicator that remedial education is not working properly is that the majority of students drop out before they complete the lengthy pre-requisite course sequence to which they were assigned. Accelerated programs are designed to shorten the timeframe of remedial education, thereby giving students fewer natural exit points and reducing the likelihood that life events will pull them away from college before they enter college-level courses. Many acceleration programs also attempt to teach skills more closely tied to college-level programs and to approximate college-level expectations. That is, rather than simply repeating the high school curriculum, an accelerated program provides students with a safe space, and support, for practicing college-level work.

In terms of course structure, most acceleration models include one or more of the following design elements: paired courses, compressed sequences, or co-requisite support courses. Paired courses combine two sequential developmental courses into a single semester while maintaining the number of required credit hours. Compressed sequences reduce the number of required credit hours, often by eliminating redundancies or content that is irrelevant to the student’s program of study. In the co-requisite model, students enroll directly in college-level math or English but also enroll in a paired developmental support course. The best-known example of the co-requisite model is the Community College of Baltimore County’s Accelerated Learning Program (ALP), which enrolls developmental writing students in college-level English together with an ALP section of developmental writing.21 The ALP students in a given college-level English section meet together after each class with their college-level English instructor to work on learning strategies and build the skills required to meet the expectations of their college-level English assignments.


In general, acceleration models improve students’ likelihood of enrolling in and completing college-level math and English, particularly if those models include additional academic supports to help students succeed with the increased pace and challenge of the accelerated curriculum.22 Perhaps due to the success and publicity surrounding ALP, the co-requisite model has become increasingly popular across the country.

Tennessee has moved farthest in implementing and scaling up the co-requisite model, not only in English but also in mathematics. Indeed, the state has been at the forefront of community college reform in general: as part of a broad strategy to improve college completion initiated by the Complete College Tennessee Act of 2010, the state’s Board of Regents has undertaken an ambitious reform of developmental education.23 As one element of that reform, the Board implemented a set of pilot programs at ten community colleges beginning in fall 2014, involving about 1,000 students. In these pilots, students across a range of placement test scores (12 to 18 on the ACT) were enrolled in a college-level math course (Algebra II, Math for the Liberal Arts, or Probability and Statistics) while also enrolling in required support. Although the particular support varied by college and type of math course, it was typically a three-credit course that relied heavily on technology-based instruction such as MyMathLab software. According to the state system’s descriptive pilot data,24 only 12 percent of students in the old pre-requisite system (fall 2012 cohort) completed college-level math, and 31 percent completed college-level writing, within one year; under the pilot co-requisite model, those proportions increased to 51 percent for math and 59 percent for writing (fall 2015 cohort). Although these results are descriptive, they were quite encouraging, and the system scaled the co-requisite support model across all of its colleges in the 2015–16 academic year.

The spread of the co-requisite model is perhaps the most significant development in the remediation reform movement in the past two years. According to Complete College America, five state systems are currently implementing the co-requisite model statewide, three are well on their way, and thirteen more have committed to implementing the model.25 Preliminary descriptive results from Colorado, Georgia, Indiana, and West Virginia suggest similarly promising results to those seen in Tennessee.26

Despite enthusiasm for the co-requisite model, there are some students whose skills are so elementary that they will not be successful in college-level courses, even with extensive assistance. However, it is unclear how we can identify those students. Certainly there are some students who score at the lowest levels on placement exams who are nevertheless successful in co-requisite or other acceleration models;27 however, there are many others who are not successful.

Many practitioners believe that very low-performing students need more intensive and sustained support. One example of this sustained approach is the CUNY Start model at the City University of New York (CUNY), an intensive full-time program designed for students with multiple remedial needs.28 Because it is a pre-college program, it requires students to delay their formal entry into college for one semester. Instead of taking college courses, students enroll in a twelve- to eighteen-week, twenty-five-hour-per-week program that follows a particular instructional philosophy (discussed in more detail later in this report). The program costs students $75 and does not count against their financial aid. While the program began at a small scale, with 400 students in 2010–2011, by 2013–2014 enrollment was almost 4,000 across eight CUNY community colleges. One study found that, compared to students in traditional sequences, CUNY Start students were almost twice as likely to graduate within three years, and more than twice as likely to graduate with a GPA of 3.0 or higher within three years.29

Appealing to Students’ Interests

Developmental education is more likely to be effective when it is tied as closely as possible to students’ field of interest. For example, the Integrated Basic Education and Skills Training (I-BEST) program, which originated in Washington State’s community and technical college system, is designed for adult basic education students who have already chosen a career-technical education program. Basic skills teachers team-teach with technical instructors, with the basic skills curriculum tailored to the needs of the occupational program. Our research demonstrates that I-BEST students perform substantially better than their similar peers in terms of earning college-level credits and completing certificate programs.30


Within associate degree programs, colleges have been reluctant to fully embrace approaches like I-BEST for a variety of practical reasons that we will discuss later. However, many colleges are working to make math curricula and instruction for underprepared students more relevant to students’ goals by creating two or three distinct math pathways. For example, underprepared students interested in science and technical fields might still be required to complete an algebra-intensive developmental program, while students interested in criminal justice might complete a statistics-oriented program, and those interested in humanities might complete a quantitative reasoning program. The California Acceleration Project pre-statistics pathway and the Carnegie Foundation for the Advancement of Teaching’s Quantway/Statway model have recently shown very promising results, and both models are spreading across California and other states.31 In addition, a newer variant, the New Mathways Project (NMP), was incubated at The University of Texas at Austin’s Dana Center and is spreading across Texas.32

Typically, math pathways consist of a two-semester sequence that allows students to complete a college-level math course relevant to their program of interest within one year. For example, under Carnegie’s Statway® model, students enroll in a year-long program that replaces both the college’s algebra sequence and a college-level statistics course. Rigorous analysis of Statway® suggests that its students were three times as likely to complete college-level math within one year as their similar peers were within two years.33

Math pathways are not only relevant to students’ programs, but are also more accelerated than traditional remedial math curricula. While some math pathways require students to demonstrate a certain level of readiness (often, in arithmetic) prior to entry into the pathway, others allow students with any math placement score to enter. For Statway®, subgroup analyses indicated that the program’s benefits were strong not only for students who would normally be placed one level below college-level math, but also for those who would normally be placed two levels below.34 For the California Acceleration Project’s math pathways, quasi-experimental analysis indicated that the program had positive effects for students placed at all levels of the basic skills sequence.35

In general, math pathways also follow specific instructional philosophies designed to help students succeed, an issue that we will discuss in the next section. In addition, the New Mathways Project (NMP) includes a three-credit student success course that helps teach students how to be successful college students.

Beyond math pathways, colleges have seemed reluctant to tailor developmental education supports to students’ programs of interest. A few colleges have worked to integrate English or quantitative literacy supports into key college-level courses. For example, several community colleges in the Achieving the Dream network are experimenting with Reading Apprenticeship, a program developed in secondary schools in which teachers of biology, history, or other key courses are trained in strategies to support the reading success of students in their courses.36 Random-assignment studies in the secondary school context indicate that Reading Apprenticeship students’ learning gains are substantially stronger (one year beyond the typical gain in learning) in both reading comprehension and subject-specific exams.37 However, the program has not yet been evaluated in the postsecondary context, and without such evidence, many colleges may be reluctant to engage college-level faculty in the in-depth professional development required to teach under the Reading Apprenticeship model.

Other colleges are interested in designing developmental co-requisites that are tailored to students’ fields of interest; however, many students are undecided as to their field of interest, or switch fields after a semester or two. Accordingly, college leaders worry that by tailoring developmental education too closely to the student’s field of interest, the student may have to re-take developmental coursework if he or she switches fields. In order to address the problem of student indecision, some colleges have developed so-called “meta-majors” in broad areas such as health, business, or information technology.38 For example, an entering student may have a general interest in health but know little about options other than nursing; or may have a general interest in business, but be unsure how to choose between accounting and marketing. Within a meta-major, the student can take a core first-semester curriculum that allows exploration of the programs embedded within the meta-major, while completing courses that will apply to any of those programs. While the meta-major concept has become increasingly popular, thus far we have not seen colleges integrate and contextualize developmental education into meta-majors. This could be a next step in connecting remediation to college-level programs of study.

Improving Instruction

Instruction is the core of any developmental education strategy; yet research has paid little attention to instruction within the developmental classroom. One notable exception is Norton Grubb’s study of remedial education teaching in 169 classrooms in twenty California community colleges, which found that most remedial courses have a very similar pedagogical approach:

The approach emphasizes drill and practice (e.g., a worksheet of similar problems) on small subskills that most students have been taught many times before. . . . Moreover, those subskills are taught in decontextualized ways that fail to clarify for students the reasons for or the importance of learning these subskills.39

As curricular reforms have spread, education leaders are paying more attention to the instruction that takes place within restructured curricular models. The Carnegie math pathway programs, for example, emphasize the notion of “productive persistence,” or the value and rewards of persisting through difficult problems. The approach builds on the work of Stanford psychologist Carol Dweck, who points out that students who fail at a challenge can react in two ways. Some may conclude that the task is beyond their natural ability and not worth attempting, a reaction she calls a fixed mindset; others may believe they will eventually master it if they work hard enough, which she calls a growth mindset.40 Studies have demonstrated that helping students adopt a growth mindset results in improved motivation, persistence, and eventual success. A recent report on the New Mathways Project explains that this program also emphasizes productive persistence, along with a number of other techniques that faculty find helpful in engaging and motivating students. For example, faculty members try to use real data sets and to contextualize math problems within real-life situations. Instructors also require students to work in small groups to solve problems, which makes class sessions active and engaging. Similarly, CUNY Start math classrooms emphasize contextualized, real-life situations and rely on instructor questioning rather than lecturing.41

Many pre-requisite acceleration programs are following the lead of Chabot College’s accelerated English program by using college-level materials and college-style pacing in developmental coursework (even for students who fall far below the developmental cutoff) while systematically supporting students’ ability to meet those high standards.42 Chabot’s accelerated program is part of the larger California Acceleration Project (CAP) network, which encourages instructors to pay attention not only to students’ academic needs but also to their “affective needs.”43 In particular, the network’s pedagogical practices include an emphasis on establishing and maintaining positive relationships, providing class time to process content and practice skills, planning regular opportunities for metacognitive reflection, providing incentives and accountability for attendance and hard work, intervening at signs of struggle or disengagement, and maintaining a “growth mindset” approach to feedback and grading. In addition, faculty members try to teach the material in a context that is relevant to students, whether that be their career goals and interests or wider issues of concern to them, such as community engagement and social justice.44

In K–12 mathematics, instructors typically present and work through math problems without explaining the underlying concepts.45 As a result, students can pass math courses by memorizing procedures and applying them when told to do so, without really understanding what they are doing, and then forget what they have “learned” as soon as the course is over. To address this problem, Montgomery County Community College piloted an arithmetic course known as “Concepts of Numbers,” which reorders the curriculum to be more conceptual. For example, early in the semester students investigate the real numbers (whole numbers, integers, rational numbers, and irrational numbers) and begin to understand how they relate to one another, such as by locating where one-third, three-halves, three, and 3.14 each fall on the same number line. Concepts of Numbers also uses a “discovery-based pedagogy”: it asks students to solve problems by drawing on previous mathematical experiences and knowledge before a rule is given, and encourages them to experiment and discover how and why algorithms serve as shortcuts. Results suggest that Concepts of Numbers students are more likely to earn a C or higher, less likely to withdraw from the course, and more likely to enroll in the next-level course of developmental algebra. However, they do no better than their peers at completing developmental algebra, completing gatekeeper math, or accruing college-level credits.46 These results suggest that while students find math more relatable and engaging when it is presented conceptually, these effects wear off once they return to a more traditional math curriculum.

Toward a More Comprehensive Approach

The reforms discussed above have led to some encouraging results, but most have not led to marked increases in graduation rates. In general, reforms that focus on only one segment of a student’s experience are insufficient to improve graduation rates, because the positive benefits of any reform will quickly fade when a student returns to the wider college and its traditional un-reformed structures and practices.


The City University of New York has taken a more comprehensive approach called the Accelerated Study in Associate Programs (ASAP). Designed for low-income students with one or more developmental needs, the program provides dedicated advising and tutoring, a student success course, blocked or linked courses, and financial support (tied to student participation in key program services) for up to three years, while requiring students to attend school full-time. A recent random-assignment study found that the program nearly doubled graduation rates: by the end of the study period, 40 percent of ASAP students had earned a degree, compared with 22 percent of the control group.47 Moreover, 25 percent of ASAP students had transferred to a four-year school, compared with 17 percent of the control group. And while ASAP is more expensive per student than regular CUNY instruction, the program’s cost per degree was lower, because many more ASAP students earned a degree. Following these very positive results, CUNY has planned a $42 million expansion of ASAP from 4,000 students to 25,000 by 2018, including all students at the predominantly low-income Bronx Community College.48
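The cost-per-degree point can be made precise under a simplifying assumption that is not the study’s actual cost methodology: let $c$ be the average cost per student over the study period and $g$ the share of students who earn a degree, so that the cost per degree is $c/g$. Using the graduation rates above,

$$
\frac{c_{\text{ASAP}}}{g_{\text{ASAP}}} < \frac{c_{\text{control}}}{g_{\text{control}}}
\iff
\frac{c_{\text{ASAP}}}{c_{\text{control}}} < \frac{g_{\text{ASAP}}}{g_{\text{control}}} = \frac{0.40}{0.22} \approx 1.82
$$

That is, under this simple model ASAP’s cost per degree is lower whenever its per-student cost is less than roughly 1.8 times that of regular instruction.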

An Ambitious Reform Agenda

Building on the developments described above, six national organizations involved in efforts to reform remedial education recently released a set of joint principles encouraging colleges to connect services for underprepared students more closely to the student’s college-level experience, while taking into account the student’s interests and goals.49 Their principles were:

- Every student’s postsecondary education begins with an intake process to choose an academic direction and identify the support needed to pass relevant credit-bearing gateway courses in the first year.

- Enrollment in college-level math and English courses or course sequences aligned with the student’s program of study is the default placement for the vast majority of students.

- Academic and non-academic support is provided in conjunction with gateway courses in the student’s academic or career area of interest through co-requisite or other models with evidence of success in which supports are embedded in curricula and instructional strategies.

- Students for whom the default college-level course placement is not appropriate, even with additional mandatory support, are enrolled in rigorous, streamlined remediation options that align with the knowledge and skills required for success in gateway courses in their academic or career area of interest.

- Every student is engaged with content of required gateway courses that is aligned with his or her academic program of study—especially in math.

- Every student is supported to stay on track to a college credential, from intake forward, through the institution’s use of effective mechanisms to generate, share, and act on academic performance and progression data.

While the principles provide a clear direction forward, a number of open questions remain for colleges wishing to implement these reforms.

First, how can colleges best help students choose an academic and career direction, and determine the type and level of academic skill-building and support students need to succeed on their chosen pathway? Current reform developments reflect a broad conviction that traditional assessment, placement, and course-choosing processes need to be replaced by a redesigned intake process that includes effective approaches to helping students explore options for college and careers, determining what cognitive and non-cognitive skills students will need to be successful in their field of interest, and assisting them to choose and enter college-level programs of study. For example, as part of that process, colleges need to determine whether each student should be placed in college-level courses, in a co-requisite remedial model, or in another education option. Thus colleges need to develop a wholly new intake system, and research needs to provide them with practical guidance on effective ways to do that.

Second, how can colleges effectively integrate developmental education with college-level programs of study? As we discussed above, several models are evolving across the field: the New Mathways Project and Statway® have created three broad types of math pathways based on students’ academic goals; some occupational programs embed basic skills instruction in substantive material; a few colleges are experimenting with Reading Apprenticeship; and colleges with meta-majors are beginning to consider tailoring services for underprepared students to each meta-major. Which models are most effective, under what conditions, and for which types of underprepared students?

Third, how can we best support students with very weak academic skills, those who might not succeed in a co-requisite program? While the majority of students will likely benefit from enrollment in college-level courses with additional targeted assistance, some will not succeed in college-level courses even with that assistance. What strategies are most effective in strengthening the skills of such students without reproducing the disconnected traditional system of remediation? There is some positive evidence for CUNY Start and other accelerated prerequisite models; are there other promising models?

Fourth, how can we support the increasingly heterogeneous mix of students enrolled in introductory college-level courses? Already, nearly half of community college students fail to meet academic progress requirements in their first semester; even among those deemed “college-ready,” many struggle in introductory courses that are critical to success in their major, such as introductory biology, psychology, history, economics, and anatomy and physiology, in addition to college-level English and math. For example, Biology 101 courses often have failure rates as high as or higher than those of English or Math 101.50 Reforms that place more students into college-level courses will tend to increase the heterogeneity of students in introductory college courses, making these courses even more difficult to teach. Thus the reform of developmental education will require better strategies for teaching introductory college-level courses, so that these courses work both for students who previously would have been assigned to developmental education and for those previously classified as college-ready.

Fifth, what are the most effective approaches to strengthening students’ non-cognitive and metacognitive skills? Traditional remediation is designed to address academic weaknesses in math and English, yet weaknesses in non-cognitive and metacognitive skills may be more serious barriers to student success. For example, students with strong metacognitive skills have tools that help them address academic weaknesses. But outside of a few specific programs discussed in our section on instruction, remediation reform has paid little attention to improving these crucial skills.

Sixth, how can we better understand students’ actual experience of alternative approaches to developmental education? The experience of K–12 reform makes it very clear that official policies regarding curricular and pedagogic design may never reach the classroom level; that is, there may be a large gap between what colleges “provide” and what each student experiences. Talking to students and otherwise collecting information from them is key to understanding how students experience reforms and how to narrow gaps between policy and experience. Gathering information from students can also help us understand whether they are making informed or uninformed decisions, which is particularly important for the first principle, on intake and choosing an academic direction.

Seventh, what are the costs and return on investment for the comprehensive reforms discussed in this report? The interventions necessary to implement the reforms span a range of costs—from almost no cost to very expensive. It will be important for institutional leaders, policymakers, and funders to understand cost implications as well as the return that could be gained from each in terms of student success, subsequent earnings gains, lower social costs, and increased tax revenues.

And eighth, how should institutions, systems, and state agencies change their organizational structures, processes, and personnel roles in order to implement current reforms effectively? What professional development opportunities must be provided for faculty, advisors, and others working with students? What types of leadership strategies can help catalyze and manage these changes? Taken together, the reforms we have discussed will require changes not only to academic support processes but also to intake systems, non-academic support structures, curricular structures, and instructional approaches. They also require supportive funding, legislation and regulation, and guidance at the system and state levels. Such comprehensive reform is unlikely to succeed without broader reforms in these political and organizational processes.

Over the next several years, a program of research and of policy and practice development that addresses these open questions can build a knowledge base to help practitioners improve not only initial college-level outcomes, but also students’ graduation and transfer rates.

This report is the fifth in a series on College Completion from The Century Foundation, sponsored by Pearson. The views and opinions expressed in this paper are those of the authors and do not necessarily reflect the views or position of Pearson.

Notes

- Thomas Bailey, “Challenge and Opportunity: Rethinking the Role and Function of Developmental Education in Community College,” New Directions for Community Colleges 144 (2009): 11–30, http://ccrc.tc.columbia.edu/media/k2/attachments/challenge-and-opportunity.pdf.

- The terms “developmental education” and “remediation” have different connotations. Remediation often refers to classroom instruction, while developmental education refers to the suite of services provided to students with weak academic skills. In this paper, when we discuss these services as they have existed in the past, we use the word “remediation,” since the vast majority of these services took the form of classroom instruction.

- Judith Scott-Clayton and Olga Rodriguez, “Discouragement, or Diversion? New Evidence on the Effects of College Remediation,” NBER Working Paper No. 18328, National Bureau of Economic Research, 2012, http://www.nber.org/papers/w18328; Thomas Bailey, Shanna Smith Jaggars, and Judith Scott-Clayton, “Characterizing the Effectiveness of Developmental Education: A Response to Recent Criticism,” Journal of Developmental Education 36, no. 3 (2013): 18–27, http://ccrc.tc.columbia.edu/publications/characterizing-effectiveness-of-developmental-education.html.

- In this report, we update and draw on a book we wrote with our colleague Davis Jenkins, entitled Redesigning America’s Community Colleges: A Clearer Path to Student Success (Cambridge, Mass.: Harvard University Press, 2015). In that book, we argued for a comprehensive community college reform agenda to improve student outcomes, and included an extensive discussion of the role of developmental education reform within that strategy.

- Bailey, Jaggars, and Scott-Clayton, “Characterizing the Effectiveness of Developmental Education,” 18–27.

- Bailey, Jaggars, and Jenkins, Redesigning America’s Community Colleges.

- Judith Scott-Clayton, Peter M. Crosta, and Clive Belfield, “Improving the Targeting of Treatment: Evidence from College Remediation,” Educational Evaluation and Policy Analysis 36, no. 3 (2014): 371–393, http://epa.sagepub.com/content/36/3/371.abstract.

- Thomas Bailey, Dong Wook Jeong, and Sung-Woo Cho, “Referral, Enrollment, and Completion in Developmental Education Sequences in Community Colleges,” Economics of Education Review 29, no. 2 (2010): 255–70, http://ccrc.tc.columbia.edu/publications/referral-enrollment-completion-developmental-education.html.

- Shanna Smith Jaggars and Michelle Hodara, “The Opposing Forces That Shape Developmental Education: Assessment, Placement, and Progression at CUNY Community Colleges,” CCRC Working Paper no. 36, Community College Research Center, Teachers College, Columbia University, 2011, http://ccrc.tc.columbia.edu/publications/opposing-forces-developmental-education.html; Elizabeth Z. Rutschow and John Diamond, Laying the Foundations: Early Findings from the New Mathways Project (New York, N.Y.: MDRC, 2015); Matthew Zeidenberg, Davis Jenkins, and Marc Scott, “Not Just Math and English: Courses That Pose Obstacles to Community College Completion,” CCRC Working Paper no. 52, Community College Research Center, Teachers College, Columbia University, 2012, http://ccrc.tc.columbia.edu/publications/obstacle-courses-community-college-completion.html.

- David Conley, College Knowledge: What It Really Takes For Students to Succeed and What We Can Do to Get Them Ready (San Francisco, Calif.: Jossey-Bass, 2005); Melinda Karp and Rachel Bork, “‘They Never Told Me What to Expect, So I Didn’t Know What to Do’: Defining and Clarifying the Role of a Community College Student,” CCRC Working Paper no. 47, Community College Research Center, Teachers College, Columbia University, 2012, http://ccrc.tc.columbia.edu/publications/defining-role-community-college-student.html; Katherine L. Hughes and Judith Scott-Clayton, “Assessing Developmental Assessment in Community Colleges (Assessment of Evidence Series),” CCRC Working Paper no. 19, Community College Research Center, Teachers College, Columbia University, 2011, http://ccrc.tc.columbia.edu/publications/assessing-developmental-assessment.html.

- Norton Grubb with Robert Gabriner, Basic Skills Education in Community Colleges: Inside and Outside of Classrooms (New York, N.Y.: Routledge, 2013).

- Michelle Hodara, Shanna Smith Jaggars, and Melinda Karp, “Improving Developmental Education Assessment and Placement: Lessons From Community Colleges Across the Country,” CCRC Working Paper no. 51, Community College Research Center, Teachers College, Columbia University, 2012, http://ccrc.tc.columbia.edu/publications/developmental-education-assessment-placement-scan.html; Hoori Kalamkarian, Julia Raufman, and Nikki Edgecombe, Statewide Developmental Education Reform: Early Implementation in Virginia and North Carolina (New York, N.Y.: Community College Research Center, Teachers College, Columbia University, 2015).

- Hodara, Jaggars, and Karp, “Improving Developmental Education Assessment and Placement.”

- Judith Scott-Clayton, “Do High-Stakes Exams Predict College Success?” CCRC Working Paper no. 41, Community College Research Center, Teachers College, Columbia University, 2012, http://ccrc.tc.columbia.edu/publications/high-stakes-placement-exams-predict.html.

- Shanna Smith Jaggars, Michelle Hodara, Sung-Woo Cho, and Di Xu, “Three Accelerated Developmental Education Programs: Features, Student Outcomes, and Implications,” Community College Review 43, no. 1 (2015): 3–26, http://ccrc.tc.columbia.edu/publications/three-accelerated-developmental-education-programs.html; Olga Rodriguez, “Increasing Access to College-Level Math: Early Outcomes Using the Virginia Placement Test,” CCRC Brief no. 58, Community College Research Center, Teachers College, Columbia University, 2014, http://ccrc.tc.columbia.edu/publications/increasing-access-to-college-level-math.html.

- Scott-Clayton, Crosta, and Belfield, “Improving the Targeting of Treatment.”

- Ross Markle, Margarita Olivera-Aguilar, Teresa Jackson, and Richard Noeth, Examining Evidence of Reliability, Validity, and Fairness for the SuccessNavigator™ Assessment (Princeton, N.J.: Educational Testing Service, 2013).

- Long Beach City College, “Promise Pathways,” Long Beach, CA, http://lbcc.edu/promisepathways/.

- Thomas Bailey, Lisa Chapman, Susan Burleson, and Ed Bowling, “Multiple Measures for Placement: Expanding Policies to Promote Student Success” (presentation, Annual Convention of the American Association of Community Colleges, San Antonio, Texas, April 19, 2015).

- Elisabeth A. Barnett, Alexander K. Mayer, and Alyssa Ratledge, “What’s Next for Developmental Reform? Research Agenda of the Center for Analysis of Postsecondary Readiness” (presentation, Achieving the Dream Annual Institute on Student Success, Baltimore, Md., February 18, 2015).

- Sung-Woo Cho, Elizabeth Kopko, Davis Jenkins, and Shanna Smith Jaggars, “New Evidence of Success for Community College Remedial English Students: Tracking the Outcomes of Students in the Accelerated Learning Program (ALP),” Community College Research Center, Teachers College, Columbia University, December 2012.

- Shanna Smith Jaggars, Michelle Hodara, Sung-Woo Cho, and Di Xu, “Three Accelerated Developmental Education Programs: Features, Student Outcomes, and Implications,” Community College Review 43, no. 1 (January 2015): 3–26.

- See “Complete College Tennessee,” Tennessee Higher Education Commission, http://thec.ppr.tn.gov/THECSIS/CompleteCollegeTN/Default.aspx.

- Clive Belfield, Davis Jenkins, and Hana Lahr, “Is Corequisite Remediation Cost-Effective? Early Findings from Tennessee,” Community College Research Center, Teachers College, Columbia University, April 2016; “Co-requisite Remediation Pilot Study, Fall 2014,” Tennessee Board of Regents, Office of the Vice Chancellor for Academic Affairs, 2015.

- “Corequisite Remediation: Spanning the Completion Divide Breakthrough Results Fulfilling The Promise of College Access for Underprepared Students,” Complete College America, 2016, completecollege.org/spanningthedivide/#home.

- “Corequisite Remediation: Spanning the Completion Divide Breakthrough Results Fulfilling The Promise of College Access for Underprepared Students,” Complete College America, 2016, http://completecollege.org/spanningthedivide/wp-content/uploads/2016/01/CCA-SpanningTheDivide-ExecutiveSummary.pdf.

- “Co-requisite Remediation Pilot Study, Fall 2014” and “Co-requisite Remediation Pilot Study, Spring 2015,” Tennessee Board of Regents, Office of the Vice Chancellor for Academic Affairs, 2015.

- See “CUNY Start,” The City University of New York, http://www2.cuny.edu/academics/academic-programs/model-programs/cuny-college-transition-programs/cuny-start/.

- Using a propensity score matching technique. Drew Allen, “Understanding Multiple Developmental Education Pathways for Underrepresented Student Populations: Findings from New York City,” PhD dissertation, New York University, 2015.

- Matthew Zeidenberg, Sung-Woo Cho, and Davis Jenkins, “Washington State’s Integrated Basic Education and Skills Training Program (I-BEST): New Evidence of Effectiveness,” CCRC Working Paper no. 20, Community College Research Center, Teachers College, Columbia University, 2010, http://ccrc.tc.columbia.edu/publications/i-best-new-evidence.html.

- Craig Hayward and Terrence Willett, Curricular Redesign and Gatekeeper Completion: A Multi-College Evaluation of the California Acceleration Project (Berkeley, Calif.: The Research & Planning Group for California Community Colleges, 2014); Hiroyuki Yamada, Community College Pathways’ Program Success: Assessing the First Two Years’ Effectiveness of Statway® (Stanford, Calif.: Carnegie Foundation for the Advancement of Teaching, 2014), http://www.carnegiefoundation.org/resources/publications/ccp-success-assessing-the-first-two-years-effectiveness-of-statway/; Nicole Sowers and Hiroyuki Yamada, Pathways Impact Report (Stanford, Calif.: Carnegie Foundation for the Advancement of Teaching, 2015), http://www.carnegiefoundation.org/resources/publications/pathways-impact-report-2013-14/.

- Rutschow and Diamond, Laying the Foundations.

- Yamada, Community College Pathways’ Program Success.

- Ibid.

- Hayward and Willett, Curricular Redesign and Gatekeeper Completion.

- WestEd, “Leader Colleges Meet Campus Goals with Reading Apprenticeship,” Readingapprenticeship.org/success-stories/leader-colleges-meet-campus-goals-with-reading-apprenticeship.

- Cynthia Greenleaf, Thomas Hanson, Joan Herman, Cindy Litman, Sarah Madden, Rachel Rosen, Christy Boscardin, Steve Schneider, and David Silver, Integrating Literacy and Science Instruction in High School Biology: Impact on Teacher Practice, Student Engagement, and Student Achievement (Oakland and Los Angeles, Calif.: WestEd and University of California Los Angeles, 2009), http://readingapprenticeship.org/wp-content/uploads/2014/01/NSF-biology-final-report.pdf; Cynthia Greenleaf, Thomas Hanson, Joan Herman, Cindy Litman, Rachel Rosen, Steve Schneider, and David Silver, A Study of the Efficacy of Reading Apprenticeship Professional Development for High School History and Science Teaching and Learning (Oakland and Los Angeles, Calif.: WestEd and University of California Los Angeles, 2011), http://readingapprenticeship.org/wp-content/uploads/2013/12/IES-history-biology-final-report1.pdf.

- Bailey, Jaggars, and Jenkins, Redesigning America’s Community Colleges.

- Grubb with Gabriner, Basic Skills Education in Community Colleges.

- Carol Dweck, Mindset: The New Psychology of Success (New York, N.Y.: Ballantine Books, 2006).

- Rutschow and Diamond, Laying the Foundations.

- Nikki Edgecombe, Shanna Smith Jaggars, Di Xu, and Melissa Barragan, “Accelerating the Integrated Instruction of Developmental Reading and Writing at Chabot College,” CCRC Working Paper no. 71, Community College Research Center, Teachers College, Columbia University, 2014, http://ccrc.tc.columbia.edu/publications/accelerating-integrated-instruction-at-chabot.html.

- Katie Hern and Myra Snell, Toward a Vision of Accelerated Curriculum and Pedagogy: High Challenge, High Support Classrooms for Underprepared Students (Oakland, Calif.: LearningWorks, 2013).

- Hayward and Willett, Curricular Redesign and Gatekeeper Completion.

- James Hiebert, Ronald Gallimore, Helen Garnier, Karen Bogard Givvin, Hilary Hollingsworth, Jennifer Jacobs, et al., Teaching Mathematics in Seven Countries: Results from the TIMSS 1999 Video Study, NCES 2003-013, revised (Washington, D.C.: U.S. Department of Education, Institute of Education Sciences, National Center for Education Statistics, 2003), http://nces.ed.gov/pubs2003/2003013.pdf.

- Susan Bickerstaff, Barbara Lontz, Maria S. Cormier, and Di Xu, “Redesigning Arithmetic for Student Success: Supporting Faculty to Teach in New Ways,” New Directions for Community Colleges 2014, no. 167 (2014): 5–14, http://onlinelibrary.wiley.com/doi/10.1002/cc.20106/abstract.

- Susan Scrivener, Michael J. Weiss, Alyssa Ratledge, Timothy Rudd, Colleen Sommo, and Hannah Fresques, Doubling Graduation Rates: Three-Year Effects of CUNY’s Accelerated Study in Associate Programs (ASAP) for Developmental Education Students (New York, N.Y.: MDRC, 2015), http://www.mdrc.org/sites/default/files/doubling_graduation_rates_fr.pdf.

- Lisa L. Colangelo and Ben Chapman, “CUNY Unveils $42M Plan to Boost Graduation Rates,” Daily News (New York), October 16, 2015, http://www.nydailynews.com/new-york/exclusive-cuny-42m-plan-boost-grad-rates-article-1.2399541.

- Achieving the Dream, the American Association of Community Colleges, the Charles A. Dana Center (developer of NMP), Complete College America, the Education Commission of the States, and Jobs for the Future, Core Principles for Transforming Remediation within a Comprehensive Student Success Strategy.

- Zeidenberg, Jenkins, and Scott, “Not Just Math and English.”